High availability

This section describes how to configure a cluster consisting of two SafeUTM servers.

Each of the two SafeUTM devices is called a node.

The cluster operates in active-passive mode. The node that processes traffic at a given time is the active node. The backup node continuously monitors the active node and takes over traffic processing if it loses communication with it. Only one node handles traffic at any time.

The nodes communicate over a dedicated physical channel, for which one physical network card is reserved on each node. This channel is called the cluster network. A keep-alive mechanism maintains communication between the nodes.

A node switch occurs when the active node fails (hangs completely or reboots) or when communication between the nodes over the cluster network is lost.
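The switching behavior described above can be sketched as a small model of the backup node. This is only an illustration: the class name, the role strings, and the 3-second timeout are assumptions, since this section does not state the keep-alive interval or timeout that SafeUTM actually uses.

```python
import time
from dataclasses import dataclass, field


@dataclass
class BackupNodeMonitor:
    """Hypothetical model of how a backup node watches the active node."""

    timeout: float = 3.0  # assumed: seconds of silence before taking over
    last_seen: float = field(default_factory=time.monotonic)
    role: str = "backup"

    def on_keepalive(self, now: float) -> None:
        # A keep-alive message arrived over the cluster network.
        self.last_seen = now

    def tick(self, now: float) -> str:
        # Take over traffic processing once the active node has been
        # silent for longer than the timeout.
        if self.role == "backup" and now - self.last_seen > self.timeout:
            self.role = "active"
        return self.role
```

In this model a hung node, a rebooting node, and a broken cluster network all look the same to the backup node: keep-alive messages stop arriving, and the backup node promotes itself.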

The cluster has one shared IP address on the internal interface and another on the external interface. Because the two nodes have different MAC addresses, the Gratuitous ARP mechanism is used to tell neighboring devices which node currently holds the shared addresses.
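For readers unfamiliar with Gratuitous ARP, the sketch below builds such a frame by hand. It shows the general packet layout only and is not taken from SafeUTM; the function name is hypothetical, and the MAC and IP values in the usage note are placeholders.

```python
import socket
import struct


def build_gratuitous_arp(mac: str, ip: str) -> bytes:
    """Build an Ethernet frame carrying a gratuitous ARP request for `ip`."""
    sender_mac = bytes.fromhex(mac.replace(":", ""))
    sender_ip = socket.inet_aton(ip)
    broadcast = b"\xff" * 6

    # Ethernet header: destination, source, EtherType 0x0806 (ARP).
    ethernet_header = struct.pack("!6s6sH", broadcast, sender_mac, 0x0806)
    arp_payload = struct.pack(
        "!HHBBH6s4s6s4s",
        1,            # hardware type: Ethernet
        0x0800,       # protocol type: IPv4
        6,            # hardware address length
        4,            # protocol address length
        1,            # opcode: request
        sender_mac,   # sender MAC: the node that now owns the address
        sender_ip,    # sender IP: the shared address
        b"\x00" * 6,  # target MAC: left empty in an announcement
        sender_ip,    # target IP equals sender IP, which makes it gratuitous
    )
    return ethernet_header + arp_payload
```

Calling `build_gratuitous_arp("02:00:00:00:00:01", "192.0.2.1")` returns a 42-byte frame. Devices that receive it update their ARP cache so that the shared IP points at the new node's MAC address.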

For the cluster to work correctly, there must be constant communication between nodes.

Cluster operation features:

- Mail works only in mail relay mode. Mailbox storage is disabled.

- Reporting, logging, and monitoring data are not synchronized between nodes. Each node stores its own data.

- Recovery from backups is not possible.

- Server names cannot be changed.

- Network interfaces cannot be added or deleted, but they can be disabled and edited.

- If your Internet provider binds access to a MAC address, Internet access will be lost when the nodes switch.

- A cluster requires only one SafeUTM license.

Requirements

To create a cluster, the following requirements must be met:

- A cluster can contain only two SafeUTM nodes.

- Both nodes must run the same system version, identical down to the build number.

- Interfaces on each SafeUTM server must be connected to the same switch or LAN segment (for more information, see step 2, point 2).

- The number of physical network cards used on both servers must be the same. If this condition is not met, you cannot create a cluster.
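As a rough pre-flight checklist, the requirements that can be checked from simple inventory data can be expressed in code. This is a sketch, not a SafeUTM tool: the `NodeInfo` fields and the version string format are assumptions, and the switch or LAN segment requirement is not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class NodeInfo:
    name: str
    version: str    # hypothetical format that includes the build number
    nic_count: int  # number of physical network cards in use


def cluster_requirement_errors(nodes: List[NodeInfo]) -> List[str]:
    """Return a list of unmet requirements; an empty list means all pass."""
    if len(nodes) != 2:
        return ["a cluster must contain exactly two nodes"]
    first, second = nodes
    errors = []
    if first.version != second.version:
        errors.append("system versions differ")
    if first.nic_count != second.nic_count:
        errors.append("number of physical network cards differs")
    return errors
```

An empty result means only that these three checks pass.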

Configuring Cluster

If you already have a configured SafeUTM server when you create the cluster, we recommend choosing it as the active node. All settings on the backup node are deleted during cluster creation.

Step 1 - Configuring the backup node

If you have just installed the SafeUTM server

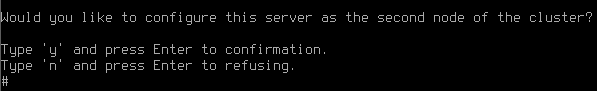

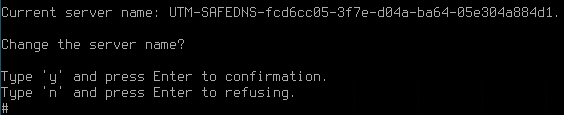

1. When you enter the local menu of the backup node, you will see the following message:

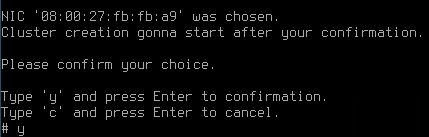

2. Type y and press Enter.

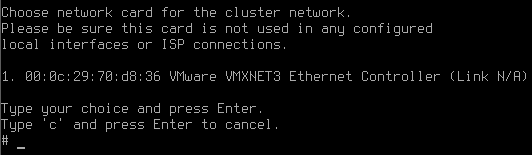

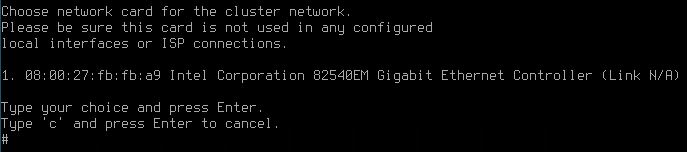

3. Select the network card:

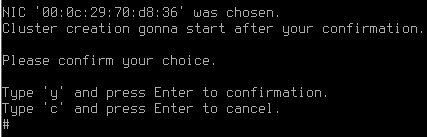

4. Confirm cluster creation by typing y and pressing Enter:

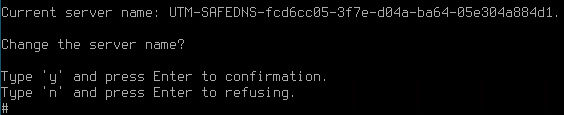

5. UTM will offer to change the name of the server. If you answer yes to the question "Change server name?", you will be prompted to enter a new server name.

The name must be between 2 and 42 characters long.

After entering the new name, press Enter to continue the dialog.

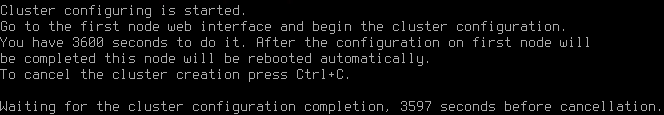

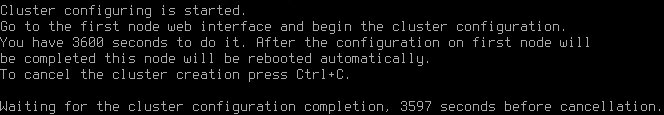

6. A message will appear stating that the cluster creation process has started.

Go to the web interface of the active node and complete the configuration (see Configuring Active Node). You have 3,600 seconds (one hour) to do this.

If you are creating a backup node from an already installed SafeUTM server with a license and Internet access

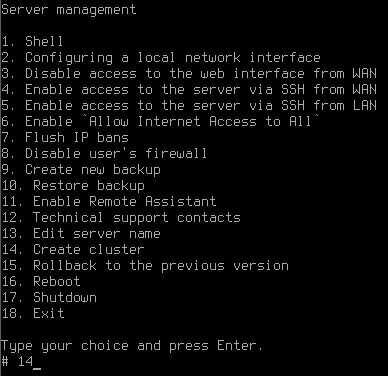

1. Go to the local menu.

2. Select Cluster Creation:

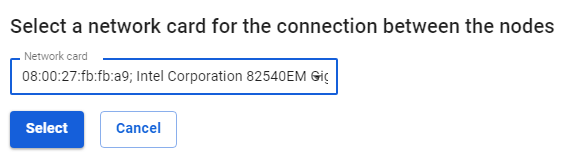

3. Select a free physical network card for the cluster network and confirm the selection.

4. Confirm cluster creation by typing y and pressing Enter.

5. UTM will offer to change the name of the server. If you answer yes to the question "Change server name?", you will be prompted to enter a new server name.

The name must be between 2 and 42 characters long.

After entering the new name, press Enter to continue the dialog.

6. A message will appear stating that the cluster creation process has started.

Go to the web interface of the active node and complete the configuration (see Configuring Active Node). You have 3,600 seconds (one hour) to do this.
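The two numeric limits in the steps above, the server name length and the time window for configuring the active node, can be captured in a short sketch. The function and constant names are hypothetical. Only the length of the name is checked, because this section does not say which characters are allowed.

```python
MIN_NAME_LENGTH = 2
MAX_NAME_LENGTH = 42
SETUP_WINDOW_SECONDS = 3600  # time allowed to configure the active node


def is_valid_server_name(name: str) -> bool:
    # Only the documented length limits are checked here.
    return MIN_NAME_LENGTH <= len(name) <= MAX_NAME_LENGTH


def seconds_left(started_at: float, now: float) -> float:
    # Remaining time in the setup window, never negative.
    return max(0.0, SETUP_WINDOW_SECONDS - (now - started_at))
```

For example, if cluster creation started on the backup node 15 minutes ago, `seconds_left(0, 900)` reports 2,700 seconds remaining.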

Configuring Active Node

To configure an active node in the SafeUTM web interface, follow these steps:

1. Go to Server Management -> High availability and click Configure active-passive cluster.

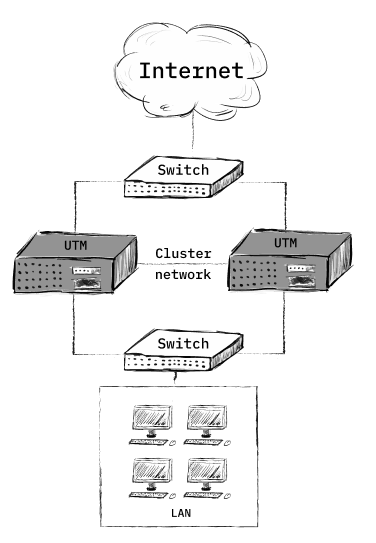

2. Confirm that the topology of your network corresponds to the diagram in the figure below:

3. Select the network card for the connection between nodes (the cluster network).

4. Match the network cards. To do this, select one network card in each column and click Match.

5. After applying the settings, the backup node will reboot, and the web interface of the active node will display information that communication with the server has been established.
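The card matching in step 4 amounts to building a one-to-one mapping between the network cards of the two nodes. The sketch below models that idea; the function name and the card names in the usage note are hypothetical, and the web interface may apply additional checks that are not described here.

```python
from typing import Dict, Iterable, List, Tuple


def build_card_mapping(
    active_cards: List[str],
    backup_cards: List[str],
    matches: Iterable[Tuple[str, str]],
) -> Dict[str, str]:
    """Map each active-node card to one backup-node card."""
    mapping: Dict[str, str] = {}
    used_backup = set()
    for active, backup in matches:
        # Both cards must exist on their respective nodes.
        if active not in active_cards or backup not in backup_cards:
            raise ValueError(f"unknown card in match: {active} <-> {backup}")
        # A card can take part in only one match.
        if active in mapping or backup in used_backup:
            raise ValueError(f"card already matched: {active} <-> {backup}")
        mapping[active] = backup
        used_backup.add(backup)
    return mapping
```

For example, `build_card_mapping(["lan", "wan"], ["eth0", "eth1"], [("lan", "eth0"), ("wan", "eth1")])` returns `{"lan": "eth0", "wan": "eth1"}`, while matching `"eth0"` a second time raises `ValueError`.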

Backup Node Capabilities:

In the local menu of the backup node, only the following server management items are available:

- Cluster destruction

- Restarting the server

- Disabling the server

- Exit

The active node retains a fully functional interface with all features available.

Cluster Destruction

You can destroy the cluster from the local menu or the web interface. The node on which you perform the operation continues to work, while the other node resets its settings to the state of a freshly installed SafeUTM.

Destroying a cluster from the local menu

Destroying a cluster from the web interface

- Go to the section Server Management -> High availability and click on Destroy cluster.

- A warning will appear.

- Click on Yes.

Node Update Procedure

To update UTM to the latest version in cluster mode, do the following:

- Start updating the active node. During the update it will reboot. After the reboot, the backup node becomes active and takes over traffic processing. At this point the cluster is not operational, because both devices must run the same system version, identical down to the build number.

- Wait for the active node to download the update, then run it. After the update is completed, the cluster will be operational again.
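The effect of the update procedure on cluster availability can be traced with a short model. It tracks only the version of each node and applies the rule that the cluster is operational when both versions are identical; the function name and the version strings in the usage note are hypothetical.

```python
from typing import List, Tuple


def operational_timeline(old: str, new: str) -> List[Tuple[str, bool]]:
    """List each stage of the update and whether the cluster is operational."""
    first, second = old, old
    timeline = [("before update", first == second)]
    first = new  # the originally active node is updated and reboots
    timeline.append(("first node updated", first == second))
    second = new  # the node that took over is updated as well
    timeline.append(("second node updated", first == second))
    return timeline
```

With `operational_timeline("1.0.100", "1.0.101")`, the middle stage is the only one where the versions differ, which corresponds to the period during which the cluster is not operational.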